10. Deployment scenarios¶

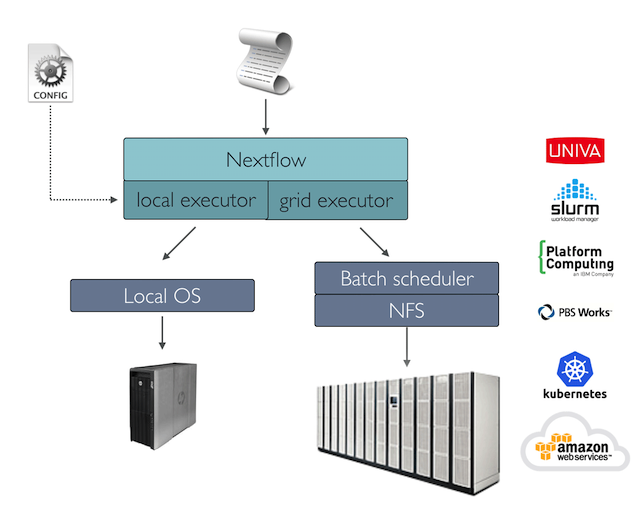

Real-world genomic applications can spawn the execution of thousands of tasks. In this scenario a batch scheduler is commonly used to deploy a workflow in a computing cluster, allowing the execution of many jobs in parallel across many compute nodes.

Nextflow has built-in support for the most commonly used batch schedulers, such as Univa Grid Engine, SLURM, and IBM LSF.

You can view the Nextflow documentation for the complete list of supported execution platforms.

10.1 Cluster deployment¶

A key Nextflow feature is the ability to decouple the workflow implementation from the actual execution platform. The implementation of an abstraction layer allows the deployment of the resulting workflow on any executing platform supported by the framework.

To run your workflow with a batch scheduler, modify the nextflow.config file specifying the target executor and the required computing resources if needed. For example:

| nextflow.config | |

|---|---|

10.1.1 Managing cluster resources¶

When using a batch scheduler, it is often needed to specify the number of resources (i.e. cpus, memory, execution time, etc.) required by each task.

This can be done using the following process directives:

| queue | the cluster queue to be used for the computation |

| cpus | the number of cpus to be allocated for a task execution |

| memory | the amount of memory to be allocated for a task execution |

| time | the max amount of time to be allocated for a task execution |

| disk | the amount of disk storage required for a task execution |

10.1.2 Workflow wide resources¶

Use the scope process to define the resource requirements for all processes in your workflow applications. For example:

| nextflow.config | |

|---|---|

10.1.3 Submit Nextflow as a job¶

Whilst the main Nextflow command can be launched on the login / head node of a cluster, be aware that the node must be set up for commands that run for a long time, even if the compute resources used are negligible. Another option is to submit the main Nextflow process as a job on the cluster instead.

Note

This requires your cluster configuration to allow jobs be launched from worker nodes, as Nextflow will submit new tasks and manage them from here.

For example, if your cluster uses Slurm as a job scheduler, you could create a file similar to the one below:

| launch_nf.sh | |

|---|---|

And then submit it with:

You can find more details about the example above here. You can find more tips for running Nextflow on HPC in the following blog posts:

10.1.4 Configure process by name¶

In real-world applications, different tasks need different amounts of computing resources. It is possible to define the resources for a specific task using the select withName: followed by the process name:

| nextflow.config | |

|---|---|

10.1.5 Configure process by labels¶

When a workflow application is composed of many processes, listing all of the process names and choosing resources for each of them in the configuration file can be difficult.

A better strategy consists of annotating the processes with a label directive. Then specify the resources in the configuration file used for all processes having the same label.

The workflow script:

| snippet.nf | |

|---|---|

The configuration file:

| nextflow.config | |

|---|---|

10.1.6 Configure multiple containers¶

Containers can be set for each process in your workflow. You can define their containers in a config file as shown below:

| nextflow.config | |

|---|---|

Tip

Should I use a single fat container or many slim containers? Both approaches have pros & cons. A single container is simpler to build and maintain, however when using many tools the image can become very big and tools can create conflicts with each other. Using a container for each process can result in many different images to build and maintain, especially when processes in your workflow use different tools for each task.

Read more about config process selectors at this link.

10.1.7 Configuration profiles¶

Configuration files can contain the definition of one or more profiles. A profile is a set of configuration attributes that can be activated/chosen when launching a workflow execution by using the -profile command- line option.

Configuration profiles are defined by using the special scope profiles which group the attributes that belong to the same profile using a common prefix. For example:

This configuration defines three different profiles: standard, cluster and cloud that set different process configuration strategies depending on the target runtime platform. By convention, the standard profile is implicitly used when no other profile is specified by the user.

To enable a specific profile use -profile option followed by the profile name:

Tip

Two or more configuration profiles can be specified by separating the profile names with a comma character:

10.2 Cloud deployment¶

Nextflow supports deployment on your favourite cloud providers. The following sections describe how to deploy Nextflow workflows on AWS.

10.2.1 AWS Batch¶

AWS Batch is a managed computing service that allows the execution of containerized workloads in the Amazon cloud infrastructure.

Nextflow provides built-in support for AWS Batch which allows the seamless deployment of a Nextflow workflow in the cloud, offloading the process executions as Batch jobs.

Once the Batch environment is configured, specify the instance types to be used and the max number of CPUs to be allocated, you need to create a Nextflow configuration file like the one shown below:

Click the icons in the code for explanations.

| nextflow.config | |

|---|---|

- Set AWS Batch as the executor to run the processes in the workflow

- The name of the computing queue defined in the Batch environment

- The Docker container image to be used to run each job

- The workflow work directory must be a AWS S3 bucket

- The AWS region to be used

- The path of the AWS cli tool required to download/upload files to/from the container

Tip

The best practice is to keep this setting as a separate profile in your workflow config file. This allows the execution with a simple command.

The complete details about AWS Batch deployment are available at this link.

Summary

In this step you have learned:

- How to configure a cluster deployment

- How to manage cluster resources

- How to submit Nextflow as a job

- How to configure process by name

- How to configure process by labels

10.2.2 Volume mounts¶

Elastic Block Storage (EBS) volumes (or other supported storage) can be mounted in the job container using the following configuration snippet:

Multiple volumes can be specified using comma-separated paths. The usual Docker volume mount syntax can be used to define complex volumes for which the container path is different from the host path or to specify a read-only option:

Tip

This is a global configuration that has to be specified in a Nextflow config file and will be applied to all process executions.

Tip

Additional documentation for AWS, GCP, and Azure are available on the Nextflow documentation site.

Summary

In this step you have learned:

- How to configure AWS Batch

- How to configure volume mounts

10.3 Additional configuration options¶

There are many different ways to deploy Nextflow workflows. The following sections describe additional configuration options for deployments.

10.3.1 Custom job definition¶

Nextflow automatically creates the Batch Job definitions needed to execute your workflow processes. Therefore it’s not required to define them before you run your workflow.

However, you may still need to specify a custom Job Definition to provide fine-grained control of the configuration settings of a specific job (e.g. to define custom mount paths or other special settings of a Batch Job).

To use your own job definition in a Nextflow workflow, use it in place of the container image name, prefixing it with the job-definition:// string. For example:

10.3.2 Custom image¶

Since Nextflow requires the AWS CLI tool to be accessible in the computing environment, a common solution consists of creating a custom Amazon Machine Image (AMI) and installing it in a self-contained manner (e.g. using Conda package manager).

Warning

When creating your custom AMI for AWS Batch, make sure to use the Amazon ECS-Optimized Amazon Linux AMI as the base image.

The following snippet shows how to install AWS CLI with Miniconda:

Note

The aws tool will be placed in a directory named bin in the main installation folder. The tools will not work properly if you modify this directory structure after the installation.

Finally, specify the aws full path in the Nextflow config file as shown below:

10.3.3 Launch template¶

An alternative approach to is to create a custom AMI using a Launch template that installs the AWS CLI tool during the instance boot via custom user data.

In the EC2 dashboard, create a Launch template specifying the user data field:

Then create a new compute environment in the Batch dashboard and specify the newly created launch template in the corresponding field.

10.3.4 Hybrid deployments¶

Nextflow allows the use of multiple executors in the same workflow application. This feature enables the deployment of hybrid workloads in which some jobs are executed on the local computer or local computing cluster, and some jobs are offloaded to the AWS Batch service.

To enable this feature use one or more process selectors in your Nextflow configuration file.

When running a hybrid workflow, -bucket-dir and -work-dir should be used to define separate work directories for remote tasks and local tasks, respectively.

For example, apply the AWS Batch configuration only to a subset of processes in your workflow. You can try the following:

| nextflow.config | |

|---|---|

- Set

slurmas the default executor - Set the queue for the SLURM cluster

- Setting of process(es) with the label

bigTask - Set

awsbatchas the executor for the process(es) with thebigTasklabel - Set the queue for the process(es) with the

bigTasklabel - Set the container image to deploy for the process(es) with the

bigTasklabel - Define the region for Batch execution

The workflow can then be executed with:

Summary

In this step you have learned:

- How to use a custom job definition

- How to use a custom image

- How to use a launch template

- How to use hybrid deployments